Key Takeaways

1. The graphics card operates at game clock speeds between 3.4 and 3.7 GHz and features a 256-bit GDDR7 memory interface with up to 32 GB of VRAM (16 GB and 24 GB options are also available).

2. It shows a 28% average performance increase at 4K resolution over the RX 9070 XT, with certain workloads achieving up to a 45% boost, positioning it against the RTX 5080 Super and possibly the RTX 4090.

3. The GPU is likely based on an enhanced Navi 48 architecture, focusing on higher clock speeds and memory bandwidth without major redesigns, and may utilize advanced TSMC process nodes.

4. AMD may adopt a strategy similar to the Radeon VII, potentially delaying the RX 9080 XT ES launch until a favorable market opportunity arises or competitive pressure increases.

5. The launch timing could depend on the readiness of FSR4 (Redstone) and other strategic factors to ensure a competitive high-end product.

Internal AMD lab tests indicate that the graphics card runs at game clock speeds of 3.4 to 3.7 GHz. The GPU has a 256-bit GDDR7 memory interface and comes in memory configurations of up to 32 GB of VRAM, with 16 GB and 24 GB options also available. Power consumption exceeds 450 watts under load, and some versions go beyond 500 watts when overclocked.
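To put the 256-bit interface in context, peak memory bandwidth follows from bus width multiplied by the per-pin data rate. The leak does not state a GDDR7 data rate, so the 28 and 32 Gbps figures below are illustrative assumptions, not reported specifications, and the function name is hypothetical. A minimal sketch:

```python
def peak_bandwidth_gb_per_s(bus_width_bits: int, data_rate_gbps_per_pin: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    Each pin carries data_rate_gbps_per_pin gigabits per second;
    dividing by 8 converts bits to bytes.
    """
    return bus_width_bits * data_rate_gbps_per_pin / 8


# 256-bit bus width comes from the leak; the data rates are assumed for illustration.
for assumed_rate in (28.0, 32.0):
    bandwidth = peak_bandwidth_gb_per_s(256, assumed_rate)
    print(f"256-bit @ {assumed_rate:.0f} Gbps/pin: {bandwidth:.0f} GB/s")
```

Under those assumed rates, a 256-bit bus would land somewhere around 900 to 1,000 GB/s, which gives a rough sense of the bandwidth headroom the rumor implies.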

Performance Benchmarks

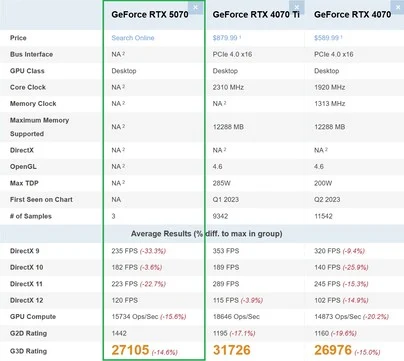

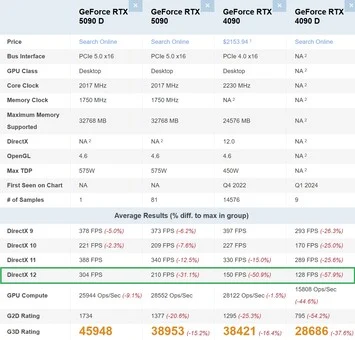

Early benchmark information shared privately with Moore’s Law Is Dead suggests an average 4K performance increase of 28% over the RX 9070 XT, with certain workloads reaching up to a 45% boost. That would put the RX 9080 XT ES in direct competition with the RTX 5080 Super and possibly even the RTX 4090, depending on how it is implemented and its final clock speeds. These figures remain speculative.

Architecture Insights

The GPU is likely based on an enhanced Navi 48 architecture, and AMD does not appear to be planning any drastic redesign. Instead, the company seems focused on higher clock speeds and increased memory bandwidth while keeping a conventional monolithic design. It may also use TSMC’s N4X or even N3X process nodes to reach the reported performance levels. AMD has publicly laid out a roadmap that includes N2X and 1.4 nm nodes for future CPUs, which makes the use of advanced nodes plausible here as well.

Strategic Decisions Ahead

Moore’s Law Is Dead suggests that the RX 9080 XT ES might adopt a strategy akin to that of the Radeon VII, which utilized a die-shrunk version of Vega to proactively counter NVIDIA’s shift to 7 nm technology. However, AMD has not yet confirmed any plans for a launch. The engineering sample may stay within the company unless AMD finds a suitable market opportunity or faces competitive pressure that necessitates a release.

The final decision on whether to launch could depend on the readiness of FSR4 (Redstone) along with other strategic factors. Multiple sources point to AMD potentially waiting to launch the RX 9080 XT alongside enhanced upscaling technology to deliver a truly competitive high-end product.
