Samsung Galaxy S24 Series: Exciting AI Features Revealed!

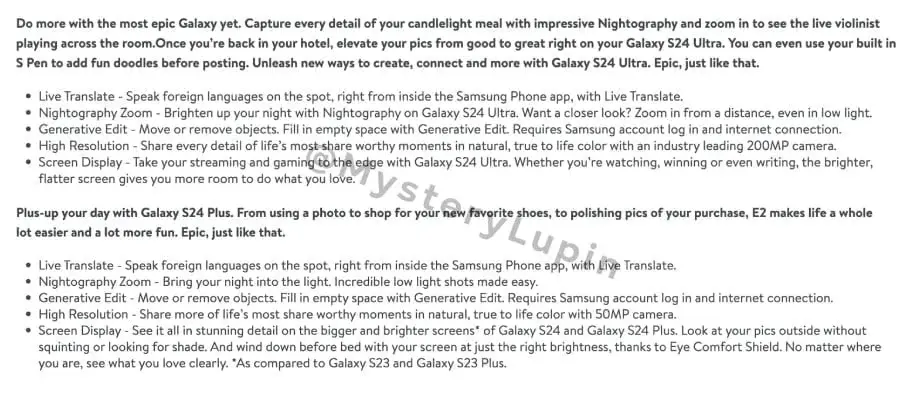

With the launch event for Samsung’s Galaxy S24 series just around the corner, new details about the phones continue to emerge. Twitter user @MysteryLupin recently shared marketing material that highlights the key AI features of the upcoming Samsung Galaxy S24 series.

Live Translate: Breaking Down Language Barriers

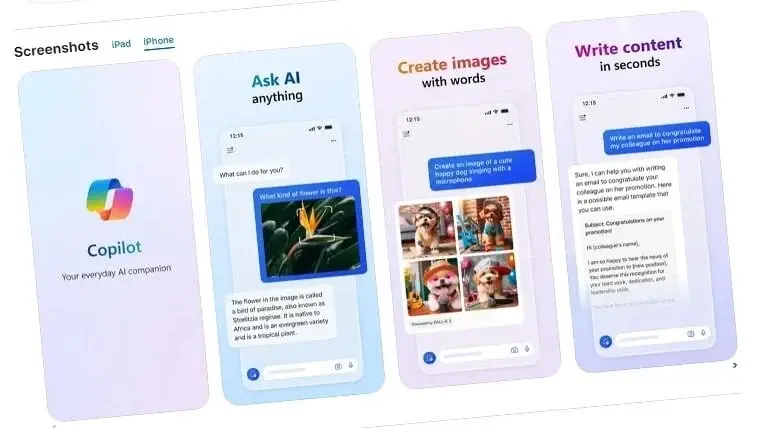

One of the AI features coming to the Galaxy S24 series is Live Translate, which provides real-time translation during phone calls. The goal is to reduce language barriers so that users can hold a conversation with someone who speaks a different language.

Generative Edit: Enhancing Your Photos

Another noteworthy feature is “Generative Edit,” which bears a striking resemblance to Google’s Magic Editor tool. With Generative Edit, users will be able to remove unwanted objects from their photos or fill in empty spaces, making it easier to clean up a shot directly on the phone.

Cloud Dependency for Generative Edit

However, like Google’s Magic Editor, Generative Edit on the Galaxy S24 series requires an internet connection and a Samsung account. Although the Snapdragon 8 Gen 3 processor in the Galaxy S24 series offers on-device AI capabilities, Generative Edit still relies on cloud processing.

The leaked material suggests that Samsung is making AI a central part of the Galaxy S24 series. Live Translate and Generative Edit both target everyday tasks, namely phone calls and photo editing, rather than niche use cases.

As we await the official unveiling of the Galaxy S24 series, these AI features add to the anticipation surrounding Samsung’s upcoming flagship devices. Stay tuned for more updates as we approach the launch event.