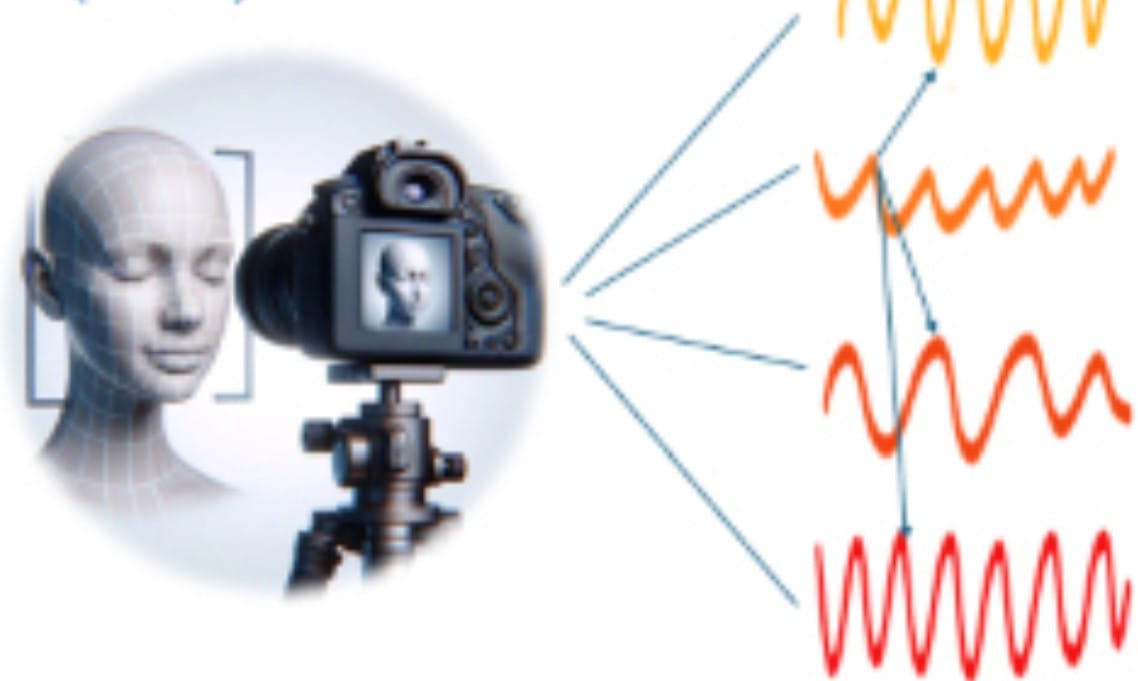

Researchers at the University of Tokyo in Japan have developed a prototype that uses an optical, non-contact technique to detect hypertension and diabetes. They pointed a multispectral camera at the faces of trial participants to record subtle changes in blood flow. AI software then analyzed the resulting image data.

Advanced Imaging Technology

The human eye cannot detect subtle changes in blood flow, but multispectral cameras are well suited to the task because they capture wavelengths beyond visible light, including infrared and ultraviolet. Even so, identifying health problems from this data is difficult without AI. Significant thermal noise and other confounding factors make the images hard to analyze with conventional methods.

AI Training and Accuracy

The AI software was trained by pairing the camera images with clinical blood measurements taken at the same time, which allowed it to learn to predict key health indicators from the images alone.

Detecting diabetes is particularly important because the disease is common and affects a growing number of people worldwide. It often goes undiagnosed for years, during which it can cause serious health complications, so early detection is essential for effective management. The AI identified diabetes with 75.3% accuracy, based on indicators such as blood glucose and A1C (glycated hemoglobin) levels.

Impact of Hypertension on Health

Blood pressure tends to rise with age, and a poor diet can push it higher while also contributing to heart problems. Cardiovascular diseases are a leading cause of death in many developed countries, which is why identifying hypertension early is vital: once detected, it can be managed effectively through medication, dietary changes, and physical activity. Notably, the AI predicted hypertension with a high accuracy of 94.2%.

The researchers still need to carry out further testing and validation before any commercial product is launched. In the meantime, individuals can take proactive steps for their health, such as checking their blood pressure at home with a monitor that includes EKG capabilities and investing in a quality pair of running shoes to stay fit.