Key Takeaways

1. AI-powered tools are attracting significant investor interest, but developing them can be costly.

2. OpenAI is seeking to reduce operating expenses by creating its own hardware instead of relying on Nvidia.

3. Samsung Foundry was considered for producing OpenAI’s AI hardware, but OpenAI has reportedly chosen TSMC’s 3nm process instead.

4. OpenAI plans to mass-produce its AI chips by 2026, with designs nearing completion.

5. OpenAI plans to invest approximately $500 million in its proprietary AI chip, aiming for long-term savings in operational costs.

Developing AI-powered tools appears to be a profitable venture, and a growing number of investors are keen to back such projects. Building AI products is nonetheless costly for anyone aiming to compete with the top players in the market. OpenAI, the company behind ChatGPT, is well aware of these financial pressures and is looking for ways to reduce its operating expenses.

OpenAI’s Strategy for Cost Reduction

According to various reports, one strategy OpenAI intends to adopt for long-term savings is designing its own hardware to run its AI services. At present, the company relies on Nvidia, the world’s leading supplier of AI hardware. That dominance allows Nvidia to set prices that some companies, including OpenAI, consider excessive.

Samsung Foundry vs. TSMC

Samsung Foundry surfaced as a potential manufacturer of OpenAI’s AI hardware after Samsung Electronics Chairman Jay Y. Lee and OpenAI CEO Sam Altman held talks during the week of February 3 to February 9. Some insiders hinted that producing OpenAI’s AI chips on Samsung’s 3nm process was among the topics discussed. However, a recent Reuters report suggests that OpenAI has opted for TSMC’s 3nm process instead.

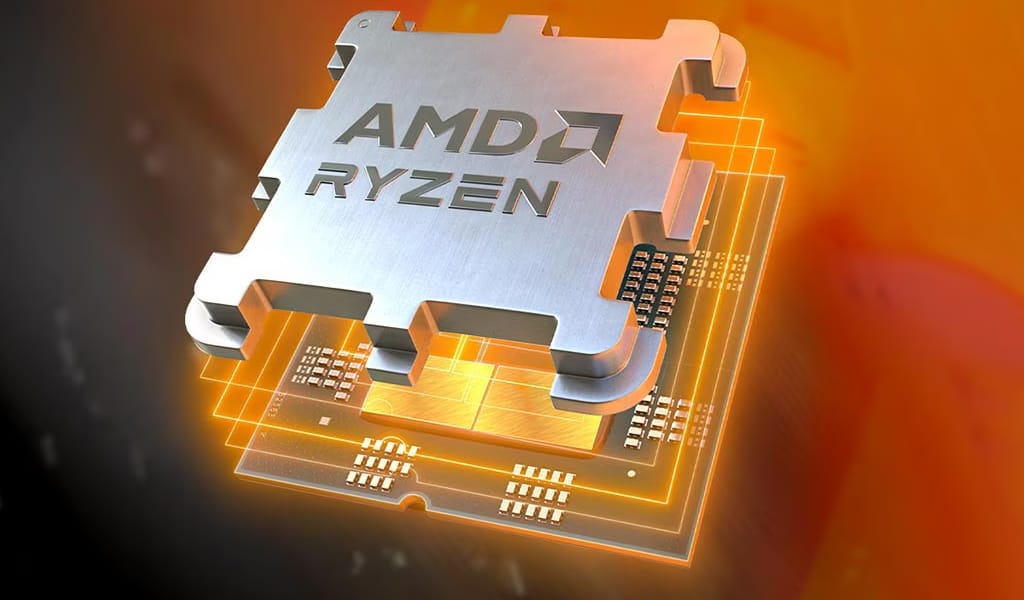

Future Plans and Investments

OpenAI would not be the first major player to turn away from Samsung: other companies, including Qualcomm and Nvidia, moved to TSMC after encountering issues and dissatisfaction with Samsung’s wafer performance. OpenAI is said to be targeting mass production of its AI chips by 2026. TSMC may receive OpenAI’s designs in the near future to begin production tests, as reports indicate that the hardware design is nearing completion.

OpenAI plans to invest approximately $500 million in developing its proprietary AI chip. Although this upfront cost is steep, the long-term savings in operating costs could be substantial. Apple took a similar path when it moved the Mac lineup from Intel processors to its own Arm-based chips.
