Key Takeaways

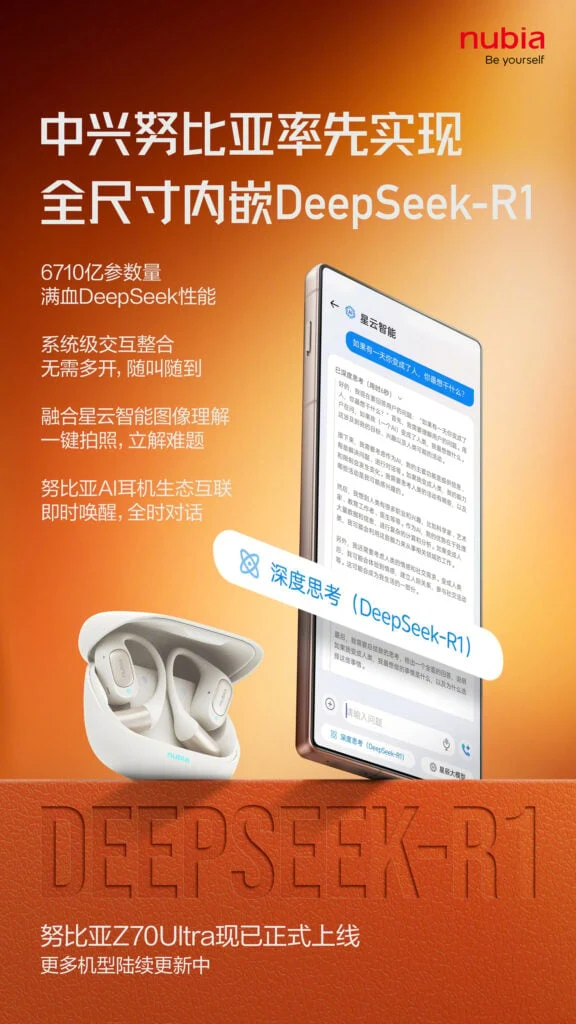

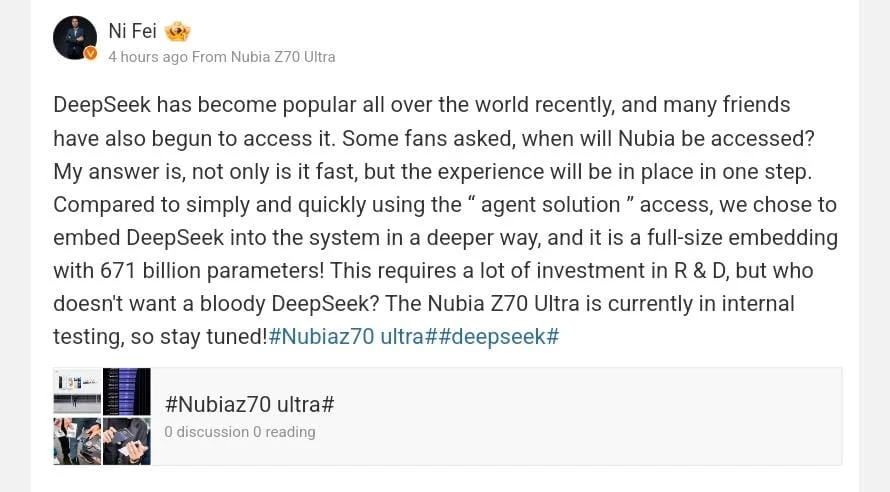

1. Nubia has launched the Nubia Z70 Ultra, which it calls the first smartphone to fully integrate DeepSeek AI for seamless on-device interactions.

2. According to Nubia, DeepSeek provides instant access to AI features without relying on cloud computing or separate apps, improving voice commands and image recognition.

3. A beta update has been released for Z70 Ultra users, improving DeepSeek functionality, optimizing the user interface, and fixing stability issues.

4. Other Chinese brands are reportedly exploring DeepSeek integration, and Samsung is rumored to be considering it for its Galaxy smartphones in China to enhance local features.

5. The growing adoption of DeepSeek AI suggests it may become a standard feature in smartphones targeting the Chinese market.

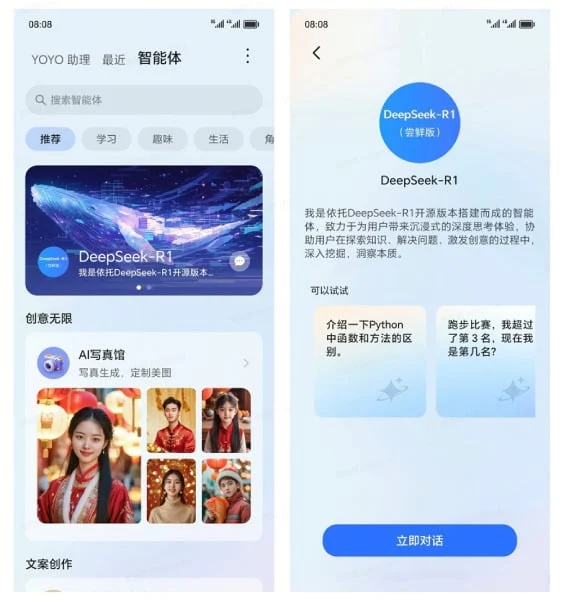

Nubia has introduced what it describes as the first smartphone to fully integrate DeepSeek AI, marking a significant step for on-device artificial intelligence. The company claims that its flagship, the Nubia Z70 Ultra, incorporates the DeepSeek model throughout the system, removing the need for separate applications and allowing for smooth AI interactions.

Instant Access to AI Features

Unlike typical AI assistants that depend on cloud computing or require dedicated apps, the Z70 Ultra's DeepSeek integration gives users immediate access to its features. These include voice assistant functions and Nebula AI-powered image recognition, which lets users photograph complex problems and have them analyzed and solved in real time. Nubia is also extending its AI ecosystem beyond smartphones, adding real-time activation to its earbuds for continuous conversational support. Updates for other models are expected soon.

Beta Update Released

Before today's reveal, Nubia had begun rolling out a beta update for Z70 Ultra users. The 126MB update improves the DeepSeek integration in both the standard and Starry Sky editions, enhancing voice assistant functions and optimizing Future Mode, which now activates Nebula AI automatically. Other changes include a repositioned search bar, fixes for incorrect app search redirections, and improvements to the screen assistant's interface behavior. The update also fixes memory leaks in the Nebula Gravity system to address stability issues.

DeepSeek’s Growing Influence

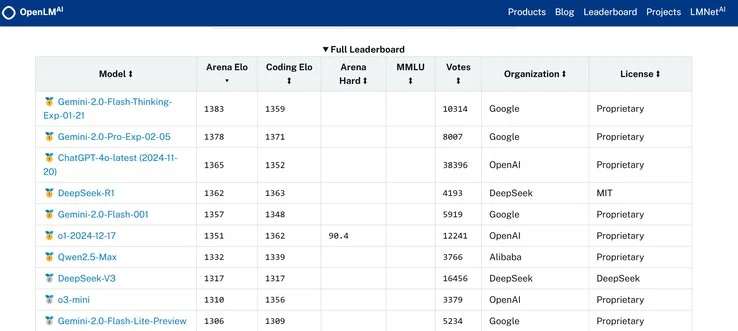

Nubia is not alone in its push to integrate DeepSeek into its devices. Other Chinese brands are reportedly working on similar features, and there are rumors that Samsung is evaluating DeepSeek AI for its Galaxy smartphones in China. Leaker Ice Universe suggests that this could position DeepSeek as a local alternative to Google's Gemini AI, enhancing One UI with region-specific AI features. Beyond functionality, adopting DeepSeek could help Samsung navigate Chinese regulations and compete more effectively against AI-focused rivals such as Huawei. Although Samsung has not confirmed its plans, the growing adoption of DeepSeek suggests it may soon become a standard feature in smartphones aimed at the Chinese market.