Just days after reports emerged that an AI feature on Huawei smartphones could be used to strip clothing from photos, Apple is now in the spotlight for a similar reason (via 404 Media).

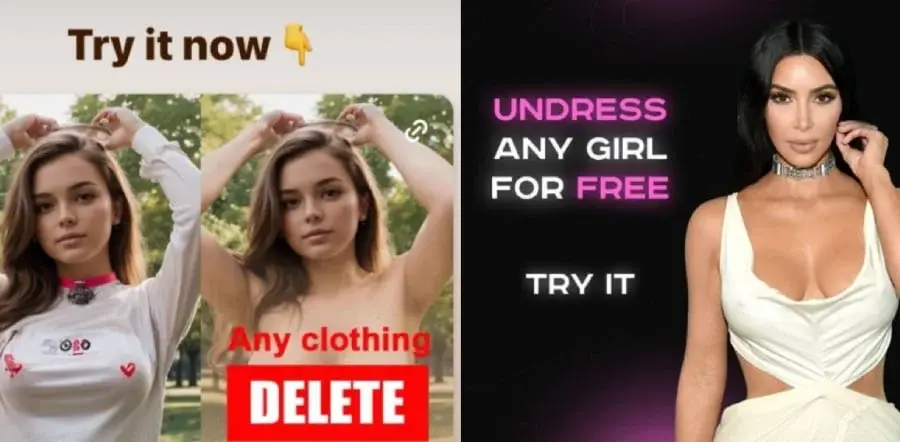

The tech giant has removed three apps from its App Store that were listed as "art generators" but were advertised on Instagram and adult websites with the claim that they could "strip any woman for free."

These apps used AI to generate fake nude images of clothed people. Although the results do not show anyone's real body, they can be used for harassment, extortion, and privacy violations.

Apple’s Response and Actions

Apple acted only after 404 Media shared information about the apps and their advertisements. Surprisingly, the apps had been on the App Store since 2022, and their "undressing" feature had been heavily promoted on adult websites.

According to the report, the apps had been allowed to remain on the App Store on the condition that they stopped advertising on adult platforms. However, one of them kept running such ads until 2024, when Google removed it from the Play Store.

Implications and Concerns

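Apple has now removed these apps from its platform. Still, the episode shows that its App Store moderation is largely reactive and that developers can exploit loopholes, for example by presenting an app innocuously in the store while advertising a very different use elsewhere. Both problems raise concerns about the ecosystem as a whole.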

This incident is particularly sensitive for Apple given the upcoming WWDC 2024, where major AI announcements for iOS 18 and Siri are expected. Apple has worked to build a reputation for responsible AI development, including by ethically licensing its training data.

In contrast, Google and OpenAI face legal challenges over their alleged use of copyrighted content to train their AI systems. Apple's slow action against apps that generate nonconsensual intimate imagery (NCII) could damage its carefully nurtured image.
