Key Takeaways

1. In-memory computing is changing computer architecture by moving processing closer to memory for improved efficiency.

2. A new 2T1R memristor design enhances energy efficiency for AI and edge devices by minimizing sneak path currents and leakage.

3. The design supports analogue vector-matrix multiplication (VMM), crucial for machine learning applications.

4. It effectively addresses virtual ground issues and wire resistance to boost performance and reduce power usage.

5. This technology paves the way for faster, more integrated AI operations in memory, hinting at a future where hard drives could process information more intelligently.

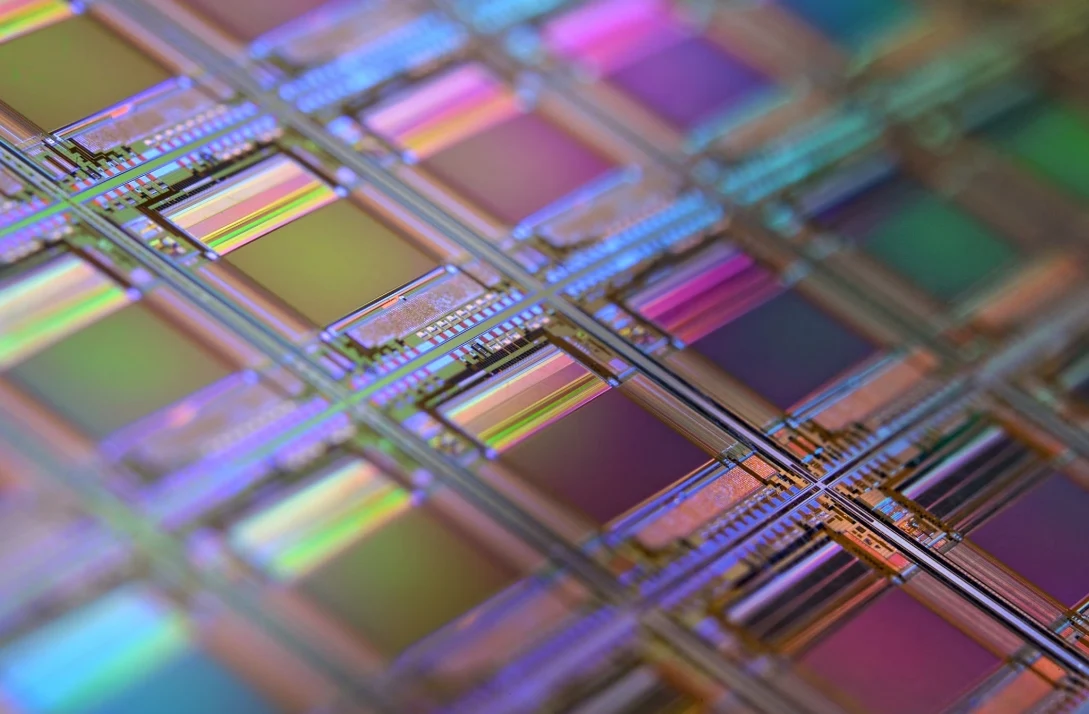

What if your hard drive did more than just store your files? Imagine it could think and react to information right where it’s located. This idea is part of in-memory computing, which is changing the way computers are built by moving processing closer to memory to improve efficiency.

New Memristor Design

Researchers from Forschungszentrum Jülich and the University of Duisburg-Essen have introduced a new design based on a 2T1R (two-transistor, one-memristor) cell. It aims to make AI and edge devices more energy-efficient.

The design, described in a paper on arXiv, places two transistors and one memristor in each cell. The transistors regulate current to minimize sneak path currents, a common problem in memristor arrays. Unlike conventional memory, the design connects both memristor terminals to ground when a cell is not in active use, which could improve signal stability and cut leakage.
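To see why sneak paths matter, here is a rough sketch of a textbook passive 2×2 crossbar, not the researchers' circuit. It assumes cell (0,0) is read while the unselected row and column are left floating, so current can also flow through the other three cells in series. The function names and conductance values are made up for illustration, and the "gated" case is an idealization in which transistors fully isolate the unselected cells.

```python
def series(*conductances):
    """Equivalent conductance of elements connected in series."""
    return 1.0 / sum(1.0 / g for g in conductances)


def passive_read(G):
    """Apparent conductance of cell (0,0) in a passive 2x2 crossbar.

    Assumption: row 1 and column 1 float, so a sneak path runs through
    cells (0,1), (1,1) and (1,0) in series, in parallel with the target.
    """
    sneak = series(G[0][1], G[1][1], G[1][0])
    return G[0][0] + sneak


def gated_read(G):
    """Idealized read with unselected cells isolated by transistors."""
    return G[0][0]


# Hypothetical values in siemens: the target cell is in a high-resistance
# state, while its three neighbours are in a low-resistance state.
G = [[1e-6, 100e-6],
     [100e-6, 100e-6]]

print("true conductance:    ", gated_read(G))
print("apparent (with sneak):", passive_read(G))
```

In this toy case the sneak path dominates the reading, which is the kind of error that per-cell transistors are meant to suppress.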

Enhancing Machine Learning

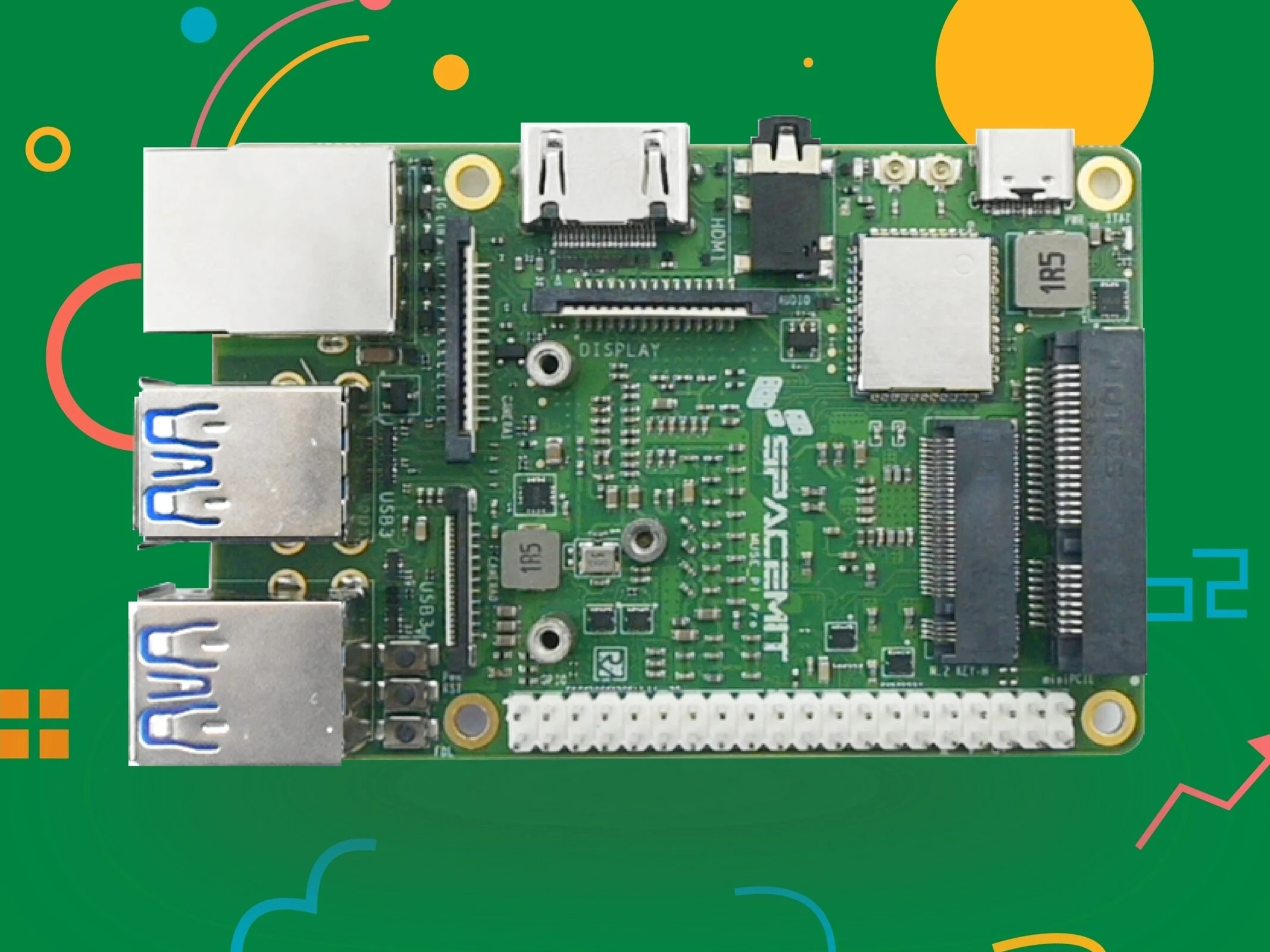

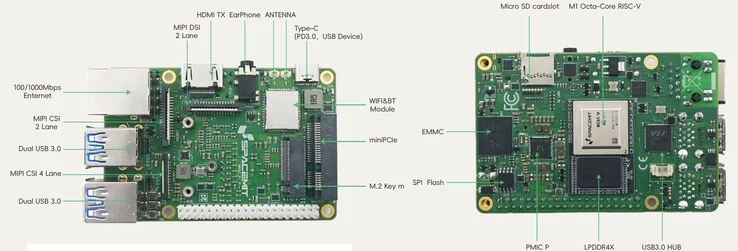

The architecture is meant to enable analogue vector-matrix multiplication (VMM), a key element in machine learning. It achieves this by managing memristor conductance with built-in DACs, PWM signals, and controlled current paths. A functional 2×2 test array was successfully created using standard 28 nm CMOS technology.
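For readers new to analogue VMM, the basic idea can be sketched in a few lines. This is a simplified model of an ideal crossbar, not the paper's implementation: each memristor contributes a current equal to its input voltage times its conductance (Ohm's law), and the currents on each column add up (Kirchhoff's current law). The function name and the values below are illustrative assumptions, and the model ignores wire resistance, DAC resolution, and PWM timing.

```python
def crossbar_vmm(voltages, conductances):
    """Ideal crossbar VMM: column current j = sum over rows i of V_i * G_ij."""
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("need one input voltage per row")
    return [
        sum(voltages[i] * conductances[i][j] for i in range(n_rows))
        for j in range(n_cols)
    ]


# Hypothetical 2x2 array: conductances in siemens, inputs in volts.
G = [[1e-6, 2e-6],
     [3e-6, 4e-6]]
V = [0.1, 0.2]

I = crossbar_vmm(V, G)  # one output current (in amperes) per column
print(I)
```

The stored conductances play the role of the weight matrix, so the multiplication happens where the data lives instead of being shuttled to a separate processor.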

By tackling virtual ground concerns and the impact of wire resistance, the design seeks to improve reliability and reduce power usage. Compatible with RISC-V control and digital interfaces, the 2T1R structure may set the stage for scalable neuromorphic chips, allowing for faster and more compact AI acceleration directly in memory.

Future of AI and Memory

While your hard drive isn’t quite thinking yet, the technology that could make this a reality is already being developed in silicon. This progress hints at a future where AI runs faster and is more tightly integrated with memory.
