Key Takeaways

1. Meta’s AI can reconstruct sentences from brain activity, predicting up to 80% of typed characters; Meta says this could eventually help people who have lost the ability to speak.

2. The research uses non-invasive methods (MEG and EEG) to capture brain activity without surgery.

3. Limitations include the need for a magnetically shielded environment and the requirement for participants to remain still.

4. The AI helps researchers understand how the brain translates thoughts into language, revealing a ‘dynamic neural code.’

5. Meta is investing in further research with a $2.2 million donation and partnerships with various European institutions.

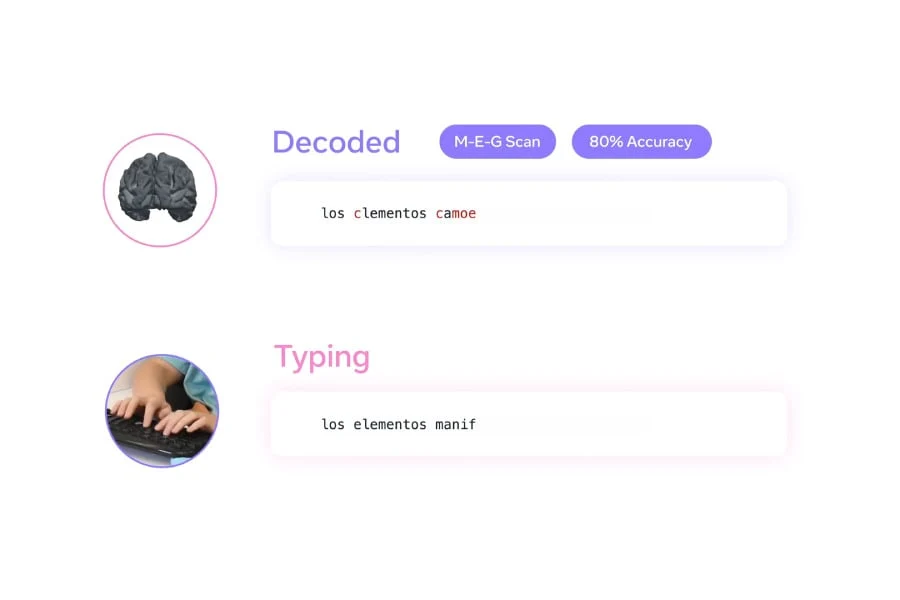

Meta’s AI research team is making strides in decoding language from brain activity. In partnership with the Basque Center on Cognition, Brain, and Language, the company has created an AI model that reconstructs sentences from brain recordings, correctly predicting up to 80% of the characters participants typed. The research records brain activity non-invasively and, according to the company, could lead to technology that assists people who have lost the ability to speak.

The Technology Behind It

Unlike current brain-computer interfaces, which typically require invasive procedures, Meta’s approach relies on magnetoencephalography (MEG) and electroencephalography (EEG). Both methods capture brain activity without surgery. The AI model was trained on recordings from 35 participants, taken while they typed sentences. On new sentences, Meta says the model can predict up to 80% of the typed characters from MEG data, at least twice the performance of EEG-based decoding.
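The article does not say how the 80% figure is calculated. As a rough illustration only, one common way to score character-level decoding is to compute the edit distance between the typed sentence and the decoded sentence, divide by the length of the typed sentence to get a character error rate, and report one minus that rate as accuracy. The function names and the sample strings below are hypothetical and are not taken from Meta’s research.

```python
def edit_distance(reference: str, hypothesis: str) -> int:
    """Levenshtein distance: the minimum number of single-character
    insertions, deletions, and substitutions turning one string into the other."""
    previous = list(range(len(hypothesis) + 1))
    for i, ref_char in enumerate(reference, start=1):
        current = [i]
        for j, hyp_char in enumerate(hypothesis, start=1):
            cost = 0 if ref_char == hyp_char else 1
            current.append(min(
                previous[j] + 1,         # drop a reference character
                current[j - 1] + 1,      # add a hypothesis character
                previous[j - 1] + cost,  # match or substitute
            ))
        previous = current
    return previous[-1]


def character_accuracy(reference: str, hypothesis: str) -> float:
    """Assumed metric: 1 - character error rate, floored at zero."""
    if not reference:
        raise ValueError("reference must be non-empty")
    error_rate = edit_distance(reference, hypothesis) / len(reference)
    return max(0.0, 1.0 - error_rate)


# Hypothetical example: 2 wrong characters out of 10 typed.
typed = "brainwaves"
decoded = "braimwoves"
print(f"{character_accuracy(typed, decoded):.0%}")  # prints 80%
```

Under this assumed metric, "up to 80%" would mean that in the best cases roughly one character in five still has to be corrected.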

Limitations and Challenges

However, the approach has limitations. MEG requires a magnetically shielded environment, and participants must remain completely still for accurate readings. The technology has also been evaluated only on healthy individuals, so its performance for people with brain injuries remains unknown.

Understanding Word Formation

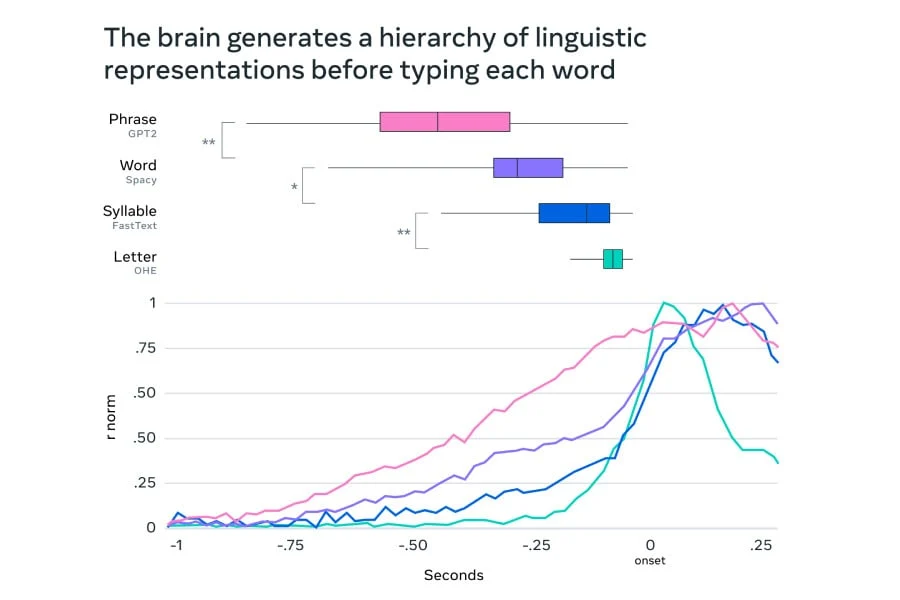

In addition to decoding brain activity into text, Meta’s AI is helping researchers understand how the brain converts ideas into language. The AI model analyzes MEG recordings at millisecond resolution. It reveals how the brain transforms abstract thoughts into words, syllables, and finally the finger movements of typing.

A significant finding is that the brain uses a ‘dynamic neural code,’ which links the successive stages of language production while keeping earlier information readily accessible. This may help explain how people construct sentences so effortlessly when communicating.

Meta’s research reinforces the idea that AI could eventually facilitate non-invasive brain-computer interfaces for those unable to communicate verbally. Nevertheless, the technology is not yet ready for practical application. There is a need for improved decoding accuracy, and the hardware requirements of MEG limit its usability outside of laboratory environments.

Meta is supporting this research through funding and partnerships. The company has pledged a donation of $2.2 million to the Rothschild Foundation Hospital to aid ongoing research. It is also collaborating with European institutions such as NeuroSpin, Inria, and CNRS.
