Key Takeaways

1. Manus AI has launched a new general AI agent that can autonomously seek answers using multiple large language models (LLMs) simultaneously.

2. Traditional chatbots are limited by their reliance on fixed training datasets and often struggle with complex questions that require problem-solving.

3. Manus AI’s agent can break tasks into smaller parts and process them simultaneously, improving problem-solving capabilities.

4. The agent can generate various outputs, including text, spreadsheets, interactive charts, web pages, and video games.

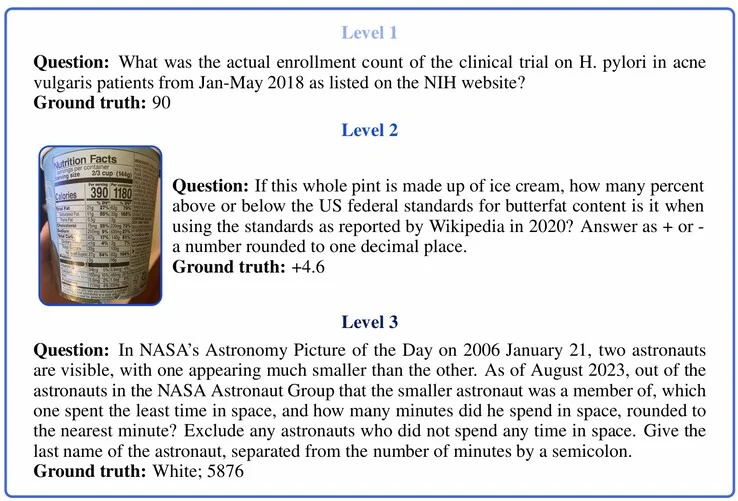

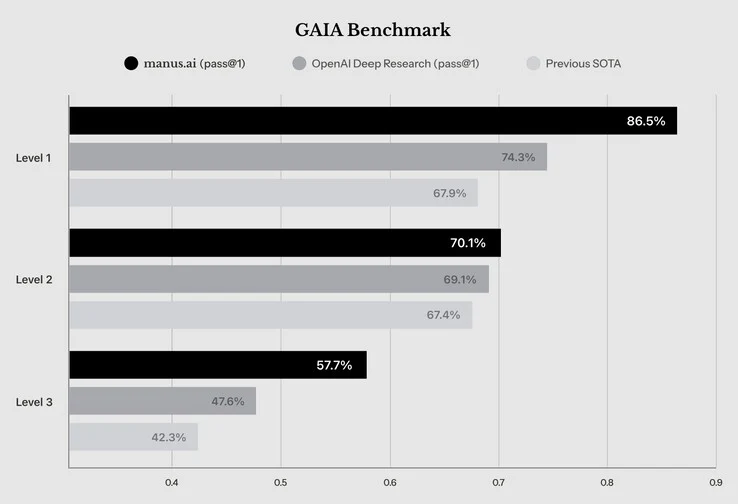

5. Manus AI’s agent scored 57.7% on Level 3 prompts in the GAIA AI benchmark and over 70% on simpler Level 1 and 2 prompts, outperforming other AI research systems.

Manus AI has introduced a new general AI agent that can autonomously seek answers to complicated questions by using multiple large language models (LLMs) at the same time. For now, access is by invitation only, and interested users can request one.

Limitations of Traditional Chatbots

Regular chatbots such as OpenAI’s ChatGPT, Microsoft Copilot, and Anthropic’s Claude are trained on a fixed dataset, so their knowledge has boundaries. They can’t answer questions about information outside their training data, although some companies mitigate this by letting their chatbots browse the Internet for up-to-date information. Even so, these chatbots struggle with complex questions that require problem-solving.

New Approaches to Problem Solving

To address this limitation, some AI companies have built systems that work through problems step by step, gather data from the web, and synthesize an answer. One example is OpenAI’s Deep Research, which launched last month. Now Manus AI has entered the field with its own agent.

Unlike OpenAI’s product, Manus’s agent draws on multiple LLMs, taking advantage of the strengths of each. Tasks are automatically divided into smaller parts and handled simultaneously, and users can watch the agent work through a problem step by step. Beyond text responses, the agent can generate spreadsheets, interactive charts, web pages, and even video games.

Performance Metrics

Manus AI’s agent scored 57.7% on Level 3 prompts in the GAIA benchmark, which poses real-world questions that are conceptually simple for humans but difficult for AI systems, and it answered the easier Level 1 and 2 prompts correctly more than 70% of the time. Manus AI claims that its agent outperforms other currently available AI systems designed to research answers.

