Key Takeaways

1. Qualcomm has launched its first Snapdragon Experience Zone in India, located at a Croma store in Juhu, Mumbai, to engage local consumers with its technology.

2. The Experience Zone showcases a variety of products, including smartphones, PCs, wearables, and audio gadgets powered by Snapdragon processors, allowing for hands-on experiences.

3. Qualcomm plans to expand the Snapdragon Experience Zones across India to enhance consumer interaction with its technology.

4. Affordable Windows laptops powered by Snapdragon X series SoCs are expected to be available in India for under $600 (around Rs. 50,000), highlighting energy efficiency and AI capabilities.

5. The initiative, dubbed “AI PCs for Everyone,” aims to make AI-enabled devices more accessible and educate consumers about the transformative capabilities of Snapdragon technology.

Qualcomm has unveiled its first Snapdragon Experience Zone in India, aimed at bringing its technology closer to local consumers. The company is known for pushing the limits of what compact, battery-powered devices can achieve, and its Snapdragon X series chips have addressed the battery-life challenges of Windows laptops. Now, alongside its research and development efforts, Qualcomm is giving people the opportunity to try that technology firsthand.

Collaboration with Croma

In partnership with Croma, a prominent consumer electronics retailer, Qualcomm has opened the Experience Zone at the Croma store in Juhu, Mumbai. Qualcomm reportedly intends to open additional zones across India, allowing customers to discover and interact with devices powered by Snapdragon processors.

A Variety of Showcased Products

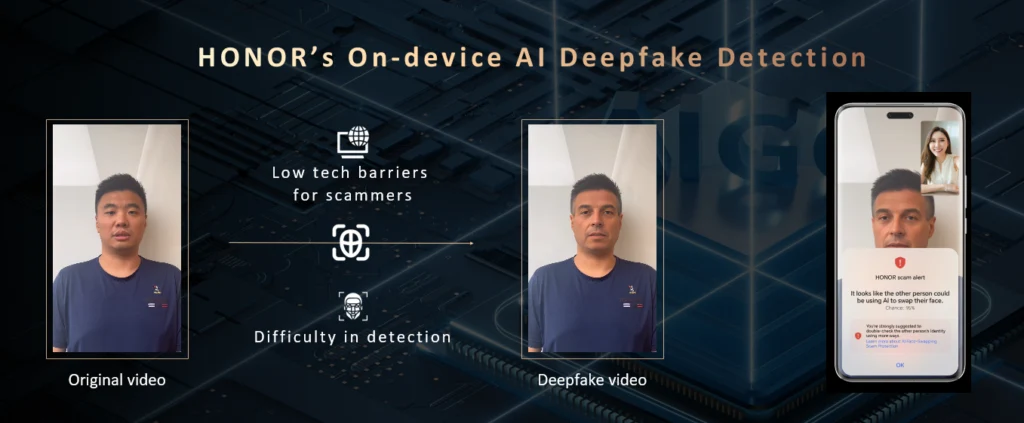

The Snapdragon Experience Zone features a diverse range of ecosystem products, including smartphones, PCs, wearables, and audio gadgets, all powered by Qualcomm’s processors. Visitors can try these devices hands-on and see the capabilities of Snapdragon chips up close, especially their on-device AI features. Knowledgeable staff are on hand to demonstrate products and answer questions, so that visitors leave with a better understanding of the technology.

Savi Soin, President of Qualcomm India, stressed the importance of this initiative, stating, “This allows customers to experience the transformative power of on-device AI, showcasing the cutting-edge capabilities of the Snapdragon ecosystem.”

Pricing and Future Developments

Windows laptops running on Arm-based Snapdragon X series SoCs are available globally for under $600 (around Rs. 50,000), and similar pricing is expected in the Indian market. Beyond their improved energy efficiency, Snapdragon-powered laptops include an NPU (neural processing unit) that makes them well suited to on-device AI processing, which is increasingly relevant as the industry integrates AI more deeply into everyday devices. Microsoft was also recently reported to be developing a distilled, NPU-optimized version of DeepSeek-R1, with Snapdragon-powered laptops the first to benefit.

The event, named “AI PCs for Everyone,” showcases Qualcomm’s commitment to making AI-enabled devices more accessible to a larger audience. With plans to further expand Snapdragon Experience Zones across India, Qualcomm seeks to bolster its presence in the country and educate consumers about the transformative possibilities of its technology.
