Key Takeaways

1. DeepSeek introduced DeepSeek-V3-0324, an updated version of its V3 large language model (LLM) with improved performance and reduced hardware requirements.

2. The V3 model is a non-reasoning model designed for quick answers. At 685 billion parameters, it is one of the largest publicly available LLMs.

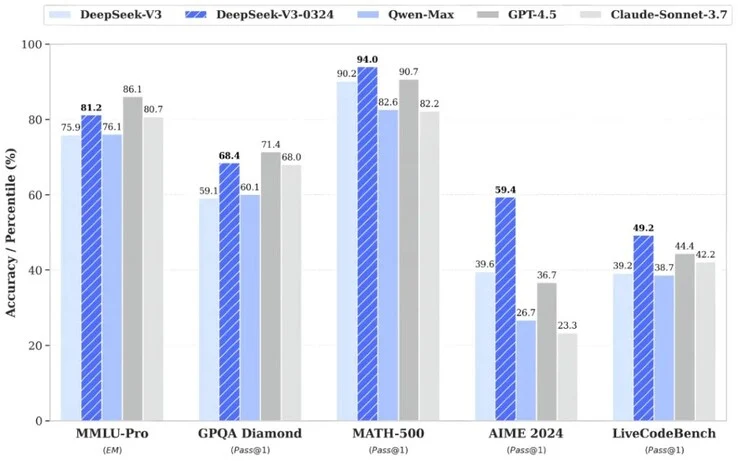

3. The updated model scored between 5.3% and 19.8% higher on AI benchmarks than its predecessor and is competitive with models such as GPT-4.5 and Claude 3.7 Sonnet.

4. Enhanced capabilities include better web page design, user interface creation for online games, and stronger Chinese-language search, writing, and translation.

5. To run the full DeepSeek-V3-0324 model, users need at least 700 GB of storage and several Nvidia A100/H100 GPUs, while smaller, distilled versions can run on a single GPU such as the Nvidia RTX 3090.

DeepSeek, a Chinese artificial intelligence firm, has introduced DeepSeek-V3-0324, an updated iteration of its V3 large language model (LLM), which was first unveiled in December 2024. The original V3 drew attention for its significantly lower training hardware requirements, shorter training time, and lower API prices, while still performing well against rival models such as OpenAI’s GPT models.

Key Features of the V3 Model

The revised V3 model is a non-reasoning model: it aims to answer quickly rather than spend extra time working through complex problems, in contrast to DeepSeek’s R1 model. At 685 billion parameters, it ranks among the largest publicly accessible LLMs. The latest model is released under the MIT License.

DeepSeek-V3-0324 scores between 5.3% and 19.8% higher on AI benchmarks than the previous V3 version. Its performance is on par with other leading models, including GPT-4.5 and Claude 3.7 Sonnet.

Enhanced Capabilities

The updated model brings several improvements. One notable upgrade is its ability to design attractive web pages and user interfaces for online games. Its Chinese-language search, writing, and translation skills have also improved significantly.

Testing the complete 685B DeepSeek-V3-0324 requires at least 700 GB of available storage and several Nvidia A100/H100 GPUs. Smaller, distilled versions of the model, however, can run on a single GPU such as the Nvidia RTX 3090.
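As a rough sanity check on those figures, the sketch below estimates how much space the raw weights of a 685-billion-parameter model occupy at different precisions, and how many GPUs would be needed just to hold them. The bytes-per-parameter values and the 80 GB of memory per GPU are assumptions made for illustration, not figures from DeepSeek, and the function names are hypothetical. The estimate ignores activations, caches, and runtime overhead, so real deployments need more headroom.

```python
import math


def weight_storage_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate size of the raw weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_gpus_for_weights(weights_gb: float, gpu_memory_gb: float) -> int:
    """Lower bound on GPU count: weights only, no activations or caches."""
    return math.ceil(weights_gb / gpu_memory_gb)


PARAMS = 685e9          # parameter count quoted in the article
GPU_MEMORY_GB = 80.0    # assumption: 80 GB A100/H100 variants

# Assumed precisions: 1 byte (8-bit) and 2 bytes (16-bit) per parameter.
for label, bytes_per_param in [("8-bit", 1), ("16-bit", 2)]:
    size_gb = weight_storage_gb(PARAMS, bytes_per_param)
    gpus = min_gpus_for_weights(size_gb, GPU_MEMORY_GB)
    print(f"{label}: ~{size_gb:.0f} GB of weights, at least {gpus} GPUs")
```

Under the one-byte-per-parameter assumption, the weights alone come to about 685 GB, which is in line with the "at least 700 GB" storage figure and with the need for several data-center GPUs.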

