Artificial intelligence is rapidly changing the technology landscape, and large language models (LLMs) have gained significant traction because of their wide range of uses. OpenAI's ChatGPT is the best-known example, while newer entrants such as China's DeepSeek have also drawn attention. India now aims to join them with its own AI model, which could launch within this year.

Indian Government’s Initiative for Affordable AI

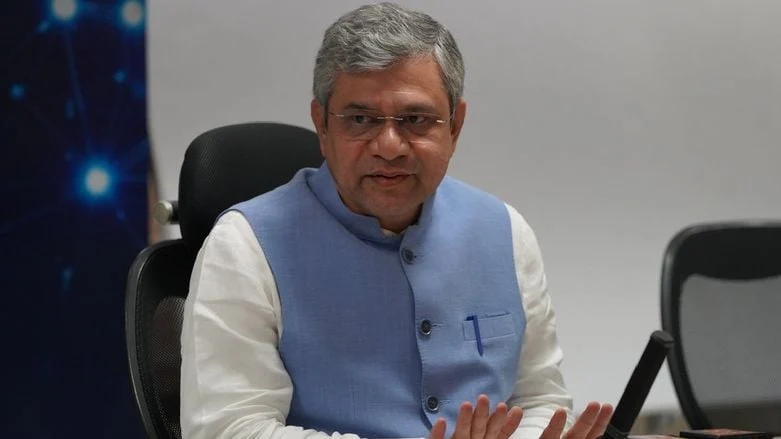

At a recent AI conference, Ashwini Vaishnaw, Union Minister of Electronics and Information Technology, announced that the Indian government is developing its own foundation AI model. He said the model will offer capabilities similar to those of DeepSeek and ChatGPT, but at a much lower development cost, and that it could be ready in roughly 8 to 10 months.

Focus on Local Needs and Inclusivity

At the event, organized by the IndiaAI Mission, Vaishnaw said that Indian researchers are building an AI ecosystem framework to support this foundation model. The aim is to serve Indian users specifically by addressing their linguistic and cultural context. The initiative is also intended to promote inclusivity and to reduce the biases found in existing models.

Computational Strength Behind the AI Development

The minister also highlighted India's computing capacity, noting that the domestic model is being developed on a facility equipped with 18,693 GPUs. By his comparison, ChatGPT was trained on around 25,000 GPUs, while DeepSeek used about 2,000.

Cost Comparison with Existing AI Models

According to the minister, access comparable to that behind well-known models like ChatGPT typically costs about $3 per hour, whereas the Indian offering is expected to cost just Rs 100 (approximately $1.15) per hour, thanks to government subsidies. The announcement follows reports that UC Berkeley researchers reproduced core behaviour of DeepSeek's model in a small-scale experiment for only about $30.