Key Takeaways

1. Samsung has launched the Exynos 2500 SoC with a 10-core CPU and Xclipse GPU based on AMD’s RDNA 3 technology.

2. The CPU features one Cortex-X925 core at 3.3 GHz, two Cortex-A725 cores at 2.76 GHz, five Cortex-A725 cores at 2.36 GHz, and two Cortex-A520 cores at 1.80 GHz.

3. The Xclipse 950 GPU offers a claimed 28% increase in frame rates compared to the Exynos 2400 and supports 4K/WQUXGA displays at up to 120 Hz.

4. The Exynos 2500 supports cameras of up to 320 MP and 8K video recording at 30 FPS, and features a dual NPU that is claimed to be 90% faster than the previous model’s.

5. Manufactured on a 3 nm process, the Exynos 2500 is designed for better energy efficiency but may face performance challenges due to having only two E-cores.

Samsung has unveiled its new Exynos 2500 SoC shortly before the chip’s expected debut in the Galaxy Z Flip 7. The announcement confirms earlier rumors and adds detail about the chip’s internals. As an earlier Geekbench listing indicated, the chip features a 10-core CPU and an Xclipse GPU built on AMD’s RDNA 3 technology.

CPU and GPU Details

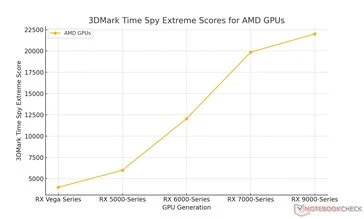

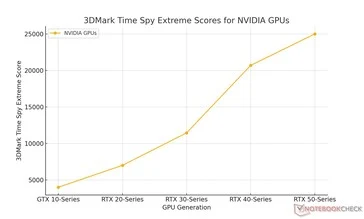

The 10-core CPU combines one Cortex-X925 core at 3.3 GHz, two Cortex-A725 cores at 2.76 GHz, five more Cortex-A725 cores at 2.36 GHz, and two Cortex-A520 efficiency cores at 1.80 GHz.

According to Samsung, the Xclipse 950 GPU, which is based on AMD’s RDNA 3 architecture, has 8 WGPs (equivalent to 16 CUs). Samsung claims this configuration delivers 28% higher frame rates than the Exynos 2400. The GPU also supports 4K/WQUXGA displays at refresh rates of up to 120 Hz.
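To put the GPU figures in concrete terms, here is a minimal Python sketch of the arithmetic. It relies on the RDNA convention that one WGP (workgroup processor) contains two CUs (compute units). The 60 FPS baseline in the usage note is a purely hypothetical number chosen for illustration, not a measured Exynos 2400 result, and the function name is made up for this example.

```python
# RDNA groups compute units (CUs) in pairs called workgroup processors (WGPs).
CUS_PER_WGP = 2

# WGP count quoted for the Xclipse 950.
XCLIPSE_950_WGPS = 8

compute_units = XCLIPSE_950_WGPS * CUS_PER_WGP  # 8 WGPs x 2 CUs each


def projected_fps(baseline_fps: float, uplift: float = 0.28) -> float:
    """Scale a baseline frame rate by a fractional uplift.

    The default of 0.28 is the 28% vendor claim; baseline_fps is whatever
    (hypothetical) Exynos 2400 frame rate the caller wants to compare with.
    """
    return baseline_fps * (1.0 + uplift)
```

For example, `compute_units` evaluates to 16, matching the quoted CU count, and `projected_fps(60.0)` gives roughly 76.8, which is what a 28% uplift would mean for a game that ran at an assumed 60 FPS on the older chip.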

Camera and Video Capabilities

The Exynos 2500 also supports cameras of up to 320 MP, paving the way for future sensors that reach this resolution, and can record video at up to 8K at 30 FPS. Like the Exynos 2400, the 2500 is equipped with a dual NPU, which Samsung claims is 90% faster than its predecessor’s. For connectivity, it offers Wi-Fi 7, Bluetooth 5.4, 5G, and satellite support.
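For a sense of scale, the short Python sketch below works out the pixel throughput implied by 8K recording at 30 FPS and what a "90% faster" NPU would mean for a task’s run time. Two assumptions are baked in: "8K" is taken to mean 8K UHD (7680 x 4320), and "90% faster" is read as 1.9 times the throughput. The 100 ms task time in the usage note is hypothetical, as is the function name.

```python
# Assumption: "8K" means 8K UHD, i.e. 7680 x 4320 pixels per frame.
EIGHT_K_WIDTH = 7680
EIGHT_K_HEIGHT = 4320
FRAMES_PER_SECOND = 30

pixels_per_frame = EIGHT_K_WIDTH * EIGHT_K_HEIGHT
pixels_per_second = pixels_per_frame * FRAMES_PER_SECOND


def time_after_speedup(old_ms: float, faster_by: float = 0.90) -> float:
    """Run time after a speedup, assuming 'X% faster' means throughput
    scales by (1 + X), so the time shrinks by the same factor."""
    return old_ms / (1.0 + faster_by)
```

Under these assumptions, each 8K frame holds 33,177,600 pixels, so 8K at 30 FPS means processing 995,328,000 pixels per second. A hypothetical NPU task that took 100 ms on the previous chip would take about 52.6 ms, per `time_after_speedup(100.0)`.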

Manufacturing and Power Efficiency

The Exynos 2500 is Samsung’s first smartphone SoC built on its 3 nm (likely 3GAP) process. It supports LPDDR5X memory and UFS 4.1 storage. On paper, it should be more energy-efficient than the Exynos 2400 thanks to its GAAFET-based manufacturing. However, having only two E-cores could hold it back in real-world use.
