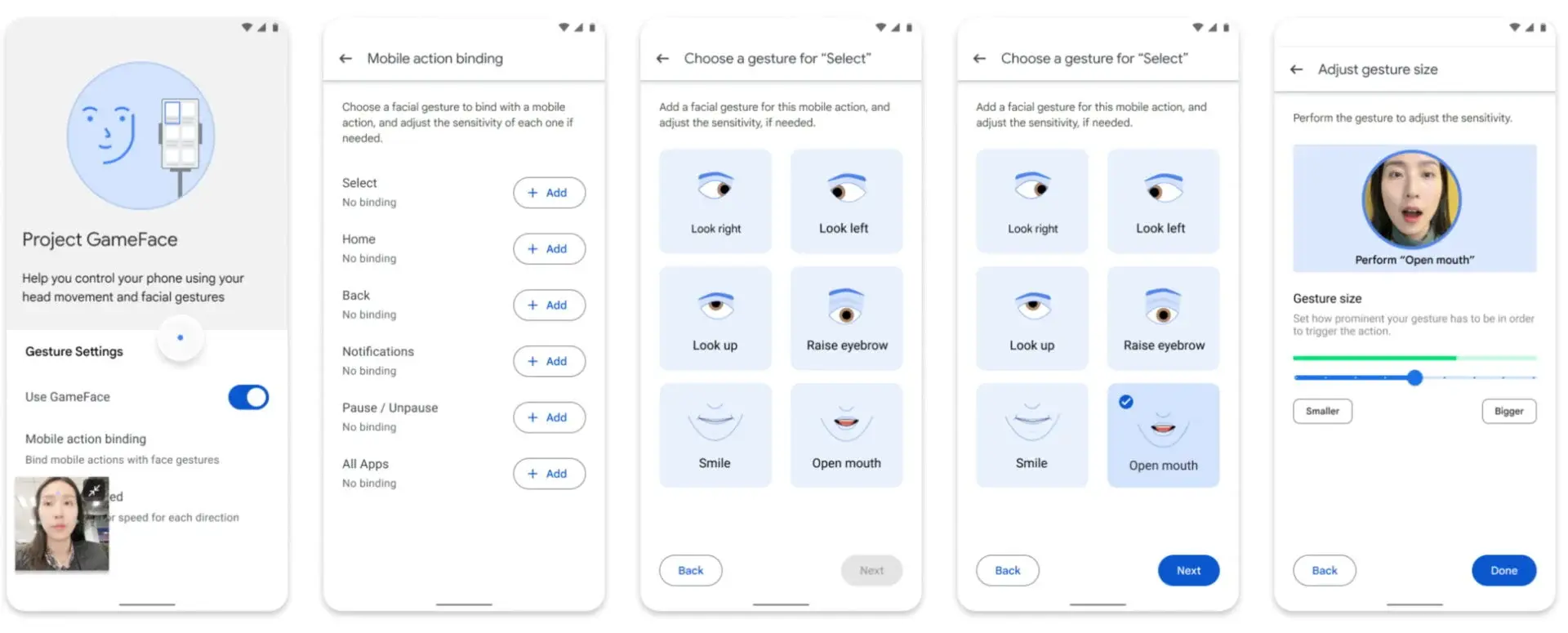

Google announced on Tuesday that it has made the code for Project Gameface, a hands-free gaming “mouse” controlled by facial expressions, open-source for Android developers. This move enables developers to incorporate this accessibility feature into their applications. Users can manipulate the cursor using facial gestures or head movements, such as opening their mouths to move the cursor or raising their eyebrows to click and drag.

Utilizes Device Camera and Facial Expressions Database

Originally unveiled for desktops during last year’s Google I/O, Project Gameface leverages the device’s camera along with a database of facial expressions from MediaPipe’s Face Landmarks Detection API to control the cursor.

“Through the device’s camera, it seamlessly tracks facial expressions and head movements, translating them into intuitive and personalized control,” explained Google in its announcement. “Developers can now build applications where their users can configure their experience by customizing facial expressions, gesture sizes, cursor speed, and more.”
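The configuration Google describes can be pictured as a per-user set of thresholds and a speed multiplier applied to the expression scores a face tracker reports. The following is a minimal sketch under stated assumptions, not Project Gameface's actual code: the names `GestureConfig`, `triggered_actions`, and `cursor_delta` are hypothetical, the expression names `jawOpen` and `browInnerUp` are illustrative blendshape-style labels, and scores are assumed to range from 0 to 1.

```python
from dataclasses import dataclass, field


@dataclass
class GestureConfig:
    """Hypothetical per-user settings; not taken from the Gameface source."""
    # Minimum score ("gesture size") an expression must reach to count.
    thresholds: dict = field(
        default_factory=lambda: {"jawOpen": 0.5, "browInnerUp": 0.4}
    )
    # Which app-defined action each expression triggers (assumed names).
    actions: dict = field(
        default_factory=lambda: {
            "jawOpen": "move_cursor",
            "browInnerUp": "click_and_drag",
        }
    )
    # Multiplier applied to head movement before moving the cursor.
    cursor_speed: float = 1.0


def triggered_actions(scores, config):
    """Return the actions whose expression score meets the user's threshold."""
    result = []
    for name, action in config.actions.items():
        # Missing scores count as 0; missing thresholds can never be met.
        if scores.get(name, 0.0) >= config.thresholds.get(name, float("inf")):
            result.append(action)
    return result


def cursor_delta(head_dx, head_dy, config):
    """Scale a head displacement by the user's chosen cursor speed."""
    return (head_dx * config.cursor_speed, head_dy * config.cursor_speed)
```

In this sketch, raising a threshold means the user must make a more pronounced expression before the action fires, while `cursor_speed` scales how far the cursor travels for a given head movement.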

Expanding Beyond Gaming

Project Gameface was initially designed for gamers, but Google has teamed up with Incluzza, a social enterprise that focuses on accessibility in India, to explore extending it to other scenarios such as work, school, and social activities.

The inspiration behind Project Gameface came from Lance Carr, a quadriplegic video game streamer with muscular dystrophy. Carr partnered with Google on the project, aiming to create an affordable and accessible alternative to costly head-tracking systems.
