Key Takeaways

1. Nvidia’s RTX 40 series is facing serious issues with drivers starting from version 572.xx, causing black screens and instability.

2. Hotfixes are being released, but many problems remain unresolved, leading to user frustration.

3. Rolling back to driver version 566.36 resolves issues for some RTX 40 series users, but they lose access to new features like DLSS 4.

4. Popular games are experiencing compatibility issues with the new drivers, prompting developers to recommend reverting to older driver versions.

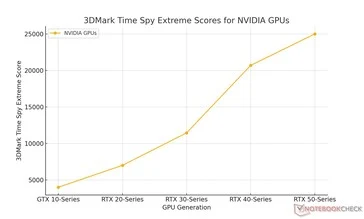

5. Nvidia has not publicly acknowledged the ongoing problems for RTX 30 and 40 series users, focusing more on the RTX 50 series.

Nvidia’s RTX 40 series has been hit by serious driver problems beginning with version 572.xx. Reports started surfacing in February, with users describing black screens and other instability.

Ongoing Troubles

Nvidia has been releasing hotfixes to address these issues, but many of the problems remain unresolved. RTX 30 and 40 series owners have expressed frustration, saying the company is prioritizing the RTX 50 series, where these issues appear to be less prevalent.

User Concerns

A Reddit user named “Scotty1992” shared a detailed list of grievances from RTX 30 and 40 series users. Rolling back to driver version 566.36 appears to resolve the issues for 40 series owners, but the older driver lacks new features like DLSS 4.

Game Compatibility Issues

Popular games such as Cyberpunk 2077, Alan Wake 2, and Indiana Jones and the Great Circle are reportedly experiencing problems with the new drivers, including stuttering and blue screen (BSOD) crashes.

Game developers are also advising affected users to uninstall Nvidia’s latest drivers. The developers of InZoi and the studio Neople are encouraging RTX 40 series users to revert to driver version 566.36, released in December of last year. Owners of RTX 50 series cards, as well as RTX 30 series or older cards, are advised to keep the new drivers.
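For readers who want to check where their own system falls, the developers' guidance can be sketched as a small script. This is only an illustration under stated assumptions: the helper names (`gpu_series`, `advice`, `installed_gpus`) are hypothetical, the series is guessed from the GPU name, and the rule simply encodes what is reported above (RTX 40 series on 572.xx or newer: consider 566.36; everyone else: keep the current driver). It is not official Nvidia guidance.

```python
import re
import subprocess

# Values taken from the reports above; treat them as assumptions, not official guidance.
AFFECTED_BRANCH_START = 572   # problems reportedly begin with the 572.xx drivers
ROLLBACK_VERSION = "566.36"   # version developers suggest for RTX 40 series users


def gpu_series(gpu_name):
    """Guess the RTX series (30, 40, 50, ...) from a name like 'NVIDIA GeForce RTX 4070 Ti'.

    Returns None when the name does not look like a GeForce RTX card.
    """
    match = re.search(r"RTX\s*(\d{2})\d{2}", gpu_name)
    return int(match.group(1)) if match else None


def advice(gpu_name, driver_version):
    """Apply the reported rule: only RTX 40 cards on 572.xx or newer are told to roll back."""
    major = int(driver_version.split(".")[0])
    if gpu_series(gpu_name) == 40 and major >= AFFECTED_BRANCH_START:
        return f"consider rolling back to {ROLLBACK_VERSION}"
    return "keep current driver"


def installed_gpus():
    """Query name and driver version for each GPU via nvidia-smi (must be on PATH)."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,driver_version", "--format=csv,noheader"],
        capture_output=True, text=True, check=True,
    ).stdout
    # Each line looks like "<name>, <driver_version>"; split on the last comma.
    return [tuple(part.strip() for part in line.rsplit(",", 1))
            for line in out.splitlines() if line.strip()]


if __name__ == "__main__":
    for name, version in installed_gpus():
        print(f"{name} (driver {version}): {advice(name, version)}")
```

The rule is kept in a pure function so it can be adjusted easily if Nvidia ships a fix or the recommendations change. Rolling back trades away newer features such as DLSS 4, so the output is a prompt to investigate rather than an instruction.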

Conclusion

Although Nvidia has been providing fixes for RTX 50 series users, it has not publicly acknowledged the ongoing problems for older GPUs. Until these issues are resolved, RTX 40 users may have to choose between stability and newer DLSS features.
