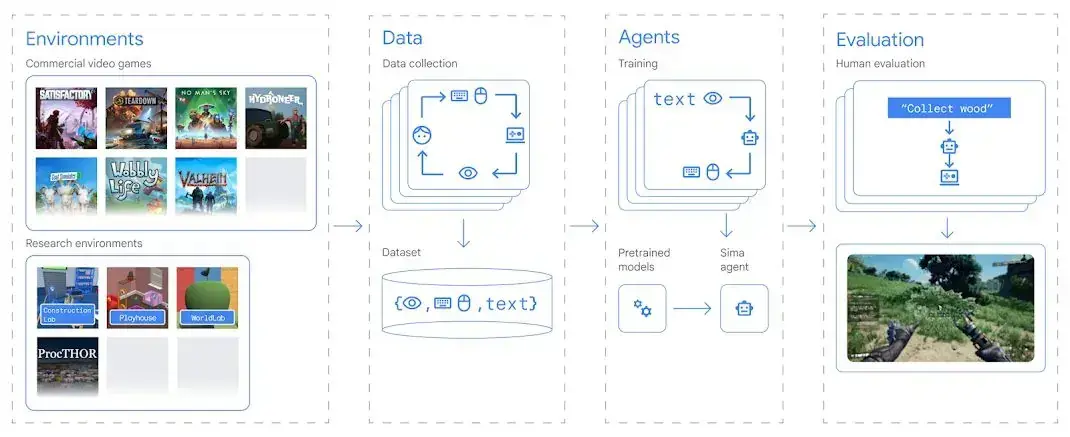

Google DeepMind, Google's AI research lab, has introduced Genie 2, a foundation world model capable of generating "action-controllable, playable 3D environments" for rapid prototyping and for training AI agents.

Advanced Capabilities

According to the company, Genie 2 builds on the capabilities of its predecessor and can produce "a vast diversity of rich 3D worlds." It can simulate object interactions, character animation, physics, and the behavior of non-player characters (NPCs). The model accepts both text and visual prompts as input.

Memory and Perspective

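Genie 2 is designed to remember parts of the world that are no longer visible to the player and to render them accurately when they come back into view. This is loosely comparable to how game engines treat off-screen content: objects outside the player's field of view (FOV) stay in the world state even though they are not drawn, and they reappear when the camera turns back. Level of Detail (LOD) is a related but distinct technique that reduces the complexity of objects and environments as their distance from the camera grows.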
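To make the game-engine comparison concrete, here is a minimal Python sketch of the two conventional ideas: a field-of-view visibility test over a persistent world state, and a distance-based LOD choice. It illustrates the analogy only; Genie 2 is a learned generative model and nothing here reflects its internals. All names (`WorldObject`, `is_visible`, `lod_level`, the thresholds) are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class WorldObject:
    name: str
    x: float
    y: float


def is_visible(obj, cam_x, cam_y, cam_heading_deg, fov_deg=90.0):
    """Return True if obj lies inside the camera's horizontal field of view."""
    angle_to_obj = math.degrees(math.atan2(obj.y - cam_y, obj.x - cam_x))
    # Smallest signed difference between the two angles, in [-180, 180).
    diff = (angle_to_obj - cam_heading_deg + 180.0) % 360.0 - 180.0
    return abs(diff) <= fov_deg / 2.0


def lod_level(obj, cam_x, cam_y, thresholds=(10.0, 30.0)):
    """Return 0 for full detail; higher values mean coarser detail.

    The level depends only on distance to the camera, not on the FOV.
    """
    dist = math.hypot(obj.x - cam_x, obj.y - cam_y)
    return sum(dist > t for t in thresholds)


# The world state persists regardless of where the camera points.
world = [WorldObject("tree", 5.0, 0.0), WorldObject("tower", 0.0, 40.0)]


def visible_names(heading_deg):
    """Names of objects drawn for a camera at the origin with this heading."""
    return [o.name for o in world if is_visible(o, 0.0, 0.0, heading_deg)]
```

Turning the camera from heading 0 to 90 degrees and back drops the tree from the drawn set and then restores it, because the object never left `world`. A generative model has no such explicit object list, which is why remembering off-screen content is notable there.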

The model can generate new content on the fly and maintain a consistent world "for up to a minute." It can also render environments from different viewpoints, such as first-person, third-person, or isometric perspectives.

Realistic Effects

Genie 2 can also produce sophisticated effects, including smoke, object interactions, fluid dynamics, gravity, and advanced lighting and reflections. DeepMind claims the model can speed up the prototyping of new concepts and ideas. AI agents can also be placed in the generated worlds and directed with simple prompts.

Several other companies are developing world models that can simulate and build representations of environments. For instance, Decart's Oasis lets users play a real-time, AI-generated version of Minecraft, while World Labs, the start-up founded by AI researcher Fei-Fei Li, has also demonstrated a 3D world generator.

Genie 2 positions Google DeepMind as a leading contender in this fast-moving area of AI-generated simulated environments.