Key Takeaways

1. Google has launched experimental versions of its Gemini models, including 2.0 Flash, 2.0 Pro, and Personalisation, available on Android, iOS, and the web.

2. Gemini 2.0 Flash offers faster performance and better efficiency than the previous version; Google has not specified the enhancements in 2.0 Pro.

3. The Personalisation model uses users’ Google Search history for more customized and accurate responses, improving user experience.

4. The Deep Research feature, previously limited to Gemini Advanced users, is now free for all users, providing in-depth, easy-to-understand reports on complex subjects.

5. These updates empower users with free access to advanced AI tools for both personal and work-related tasks, with ongoing enhancements expected in the Gemini AI ecosystem.

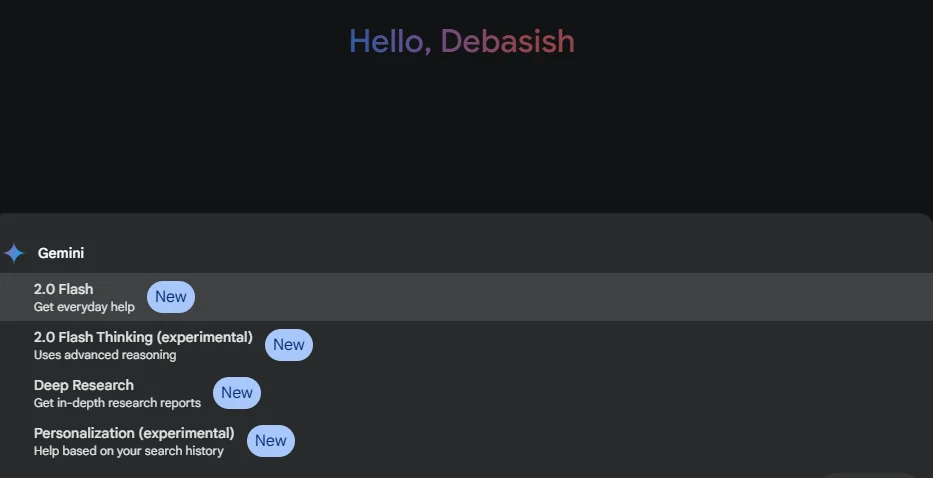

Google has started rolling out new experimental versions of its Gemini models: 2.0 Flash (experimental), 2.0 Pro (experimental), and Personalisation (experimental). They are accessible on Android, iOS, and the web. Additionally, the tech giant has made its Deep Research feature free for all users on these platforms.

What’s New?

The upgraded models offer improved functionality and efficiency. Gemini 2.0 Flash (experimental) is said to provide faster performance and better efficiency than the previous version, although Google hasn’t shared specific details about the enhancements in 2.0 Pro (experimental).

The Personalisation (experimental) model draws on users’ Google Search history to give more customized and accurate answers. This can significantly enhance the user experience, since the model has more context about the user’s preferences.

Deep Research Feature Now Accessible

Deep Research, which was previously limited to Gemini Advanced users, is now available to everyone with this update. The tool uses AI to delve into complex subjects and produce thorough, easy-to-understand reports. It’s a useful resource for learning the essentials of a topic without spending hours looking for relevant information online. All users can now find this feature in the drop-down menu at the top of the Gemini interface.

Early access to these updates has reportedly been seen on devices such as the Galaxy S23 in India and the Galaxy S25, with the latter benefiting from the Google One AI Premium plan provided by Google and Samsung. Users can now try out the new models and take advantage of the Deep Research capabilities without any extra charges.

Empowering Users with Free Features

By making these features available for free, Google is allowing users to utilize advanced AI for both personal and work-related tasks. Keep an eye out for more updates as Google continues to enhance and develop its Gemini AI ecosystem.
