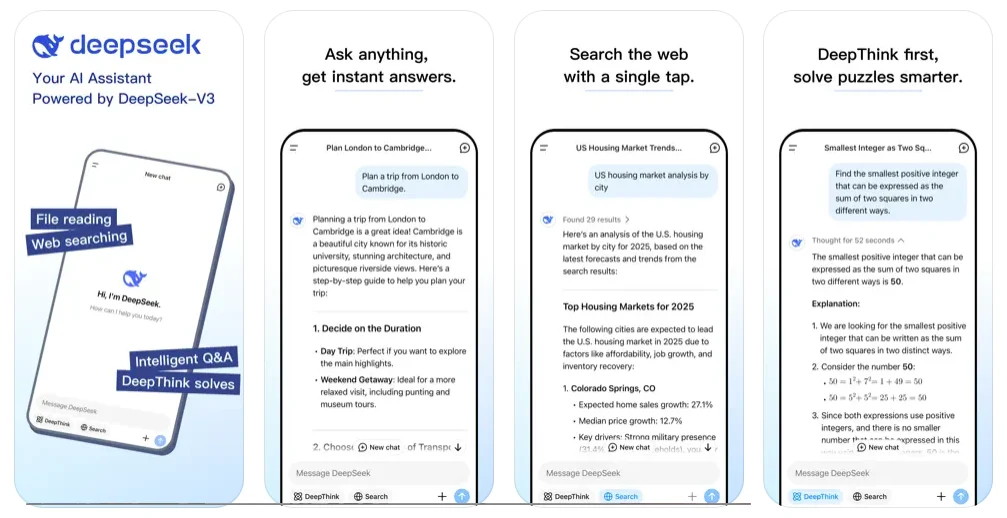

Since November 2023, DeepSeek, a Chinese firm, has been releasing its AI models as open source. Under the MIT license, anyone can use, modify, and redistribute the models, whether for personal or commercial purposes. This openness promotes transparency and flexibility in how the models can be applied.

Collaborative Development

The open-source approach also fosters collaborative development and helps save costs. Users can inspect the code to understand how the model works, tailor it to their own needs, and use it across a range of scenarios. By embracing open source, DeepSeek contributes to innovation and competition within the AI landscape.

Company Background

DeepSeek is a spin-off from Fire-Flyer, the deep-learning division of the Chinese hedge fund High-Flyer. The division’s original aim was to improve the understanding, interpretation, and prediction of financial data in the stock market. Since its establishment in 2023, DeepSeek has focused solely on large language models (LLMs), which are AI models that can generate text.

Major Breakthroughs

The company appears to have made significant advances with the latest additions to the DeepSeek AI lineup. On popular AI benchmarks, DeepSeek-V3, DeepSeek-R1, and DeepSeek-R1-Zero frequently surpass rivals from Meta, OpenAI, and Google in their respective areas. These services are also notably cheaper than ChatGPT.

Impact on Pricing

This competitive pricing could push prices down across the AI market, making sophisticated AI technologies accessible to a broader audience. DeepSeek can sustain these lower prices because it spends much less on training its AI models than its competitors do, primarily thanks to more streamlined training processes and extensive automation.

Efficiency in Reasoning Models

DeepSeek-R1 and DeepSeek-R1-Zero are reasoning models. They first formulate a strategy for answering a question and then work through it in smaller steps. This method improves the accuracy of the results while requiring less computational power. It does, however, increase the demand for storage space.
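
The plan-then-answer behavior is visible in the raw output of a reasoning model. The sketch below assumes the model wraps its reasoning in `<think>...</think>` tags before the final answer, which is how locally run R1 builds commonly present it; the function name `split_reasoning` and the sample text are made up for illustration.

```python
import re


def split_reasoning(raw: str) -> tuple[str, str]:
    """Split raw model output into (reasoning, final answer).

    Assumption: the reasoning is wrapped in <think>...</think> tags.
    If no such block is found, the whole text is treated as the answer.
    """
    match = re.search(r"<think>(.*?)</think>", raw, flags=re.DOTALL)
    if match is None:
        return "", raw.strip()
    reasoning = match.group(1).strip()
    answer = raw[match.end():].strip()
    return reasoning, answer


# Hypothetical output, not taken from a real model run.
sample = "<think>\nPlan: add 2 and 2.\n</think>\nThe answer is 4."
reasoning, answer = split_reasoning(sample)
print("Reasoning:", reasoning)
print("Answer:", answer)
```

Separating the two parts makes it easy to show only the final answer while keeping the reasoning available for inspection.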

Accessibility of Models

Because they are open source, DeepSeek models can run directly on users’ own computers. The model files cost nothing, as they can be downloaded freely from Hugging Face. Tools like LM Studio simplify the process by automatically fetching and installing everything needed to run a model.
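
For readers who prefer a script to a graphical tool, the download can be sketched with the `huggingface_hub` Python package and its `snapshot_download` function. The repository ID below is an assumption (a small distilled model chosen to keep the download manageable), and `target_dir` and `download_model` are illustrative helper names, not part of any library.

```python
from pathlib import Path

# Assumption: a DeepSeek repository ID on Hugging Face; swap in the model you want.
REPO_ID = "deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B"


def target_dir(repo_id: str, base: Path = Path("models")) -> Path:
    """Map 'org/name' to a local folder such as models/org__name."""
    return base / repo_id.replace("/", "__")


def download_model(repo_id: str = REPO_ID) -> Path:
    """Fetch every file in the repository into a local folder and return its path."""
    # Imported here so the script loads even if the package is not installed yet
    # (install it with: pip install huggingface_hub).
    from huggingface_hub import snapshot_download

    local_dir = target_dir(repo_id)
    snapshot_download(repo_id=repo_id, local_dir=local_dir)
    return local_dir


# Uncomment to start the download (needs a network connection and free disk space):
# print(download_model())
```

Once the files are on disk, no further network access is needed to load them.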

Data Security and Privacy

Running the model locally protects data privacy and security, as prompts, information, and responses remain on the user’s device. The model can also work offline. High-end hardware isn’t essential, but ample memory and storage are. For example, DeepSeek-R1-Distill-Qwen-32B needs approximately 20 GB of disk space.
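
The 20 GB figure can be checked with rough arithmetic: file size is approximately the number of parameters times the bits stored per weight, divided by 8. The sketch below assumes the "32B" in the model name means about 32 billion parameters and that the downloaded build is quantized to roughly 5 bits per weight; the quantization level is an assumption, since the article does not state it.

```python
def estimated_size_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in GB (10**9 bytes)."""
    total_bits = params_billion * 1e9 * bits_per_weight
    return total_bits / 8 / 1e9


# Assumed quantization levels, for comparison only.
print(estimated_size_gb(32, 5))   # about 5 bits per weight
print(estimated_size_gb(32, 16))  # unquantized 16-bit weights
```

Under these assumptions the quantized build comes to about 20 GB, while the same model with 16-bit weights would need roughly three times as much space. Actual files differ somewhat because they also contain metadata and may mix precisions.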

Language Capabilities

According to DeepSeek-V3 itself, the model can handle multiple languages, including Chinese and English as well as German, French, and Spanish. In brief tests, it gave satisfactory replies in each of these languages.

Concerns Regarding Censorship

However, concerns remain about Chinese censorship. DeepSeek-R1 restricts certain politically sensitive subjects. Users who ask about specific historical events may receive no response or a “revised” reply. For instance, a question about the events at Tiananmen Square on June 3 and 4, 1989, may not yield clear information.

Censorship in AI Models

That said, DeepSeek-R1 does acknowledge the student protests and a military operation. Other AI systems also limit their responses to political questions. Google’s Gemini, for instance, avoids questions that may touch on politics altogether. (Self-imposed) censorship is thus a trait shared by various AI models.
