Key Takeaways

1. Garmin Connect will integrate with Android’s Health Connect in June 2025, letting users sync their data across apps and devices.

2. At Google I/O 2025, Google reported a 50% increase in Health Connect’s active users and highlighted new collaborations with fitness apps such as Flo, Runna, and Mi Fitness.

3. New Health Connect features include Medical Records APIs and two new capabilities: Background Reads and History Reads.

4. Users will have control over data sharing through a smartphone dashboard, enabling them to manage what data is shared and delete it if needed.

5. The exact launch date for the Garmin and Health Connect integration has not yet been specified.

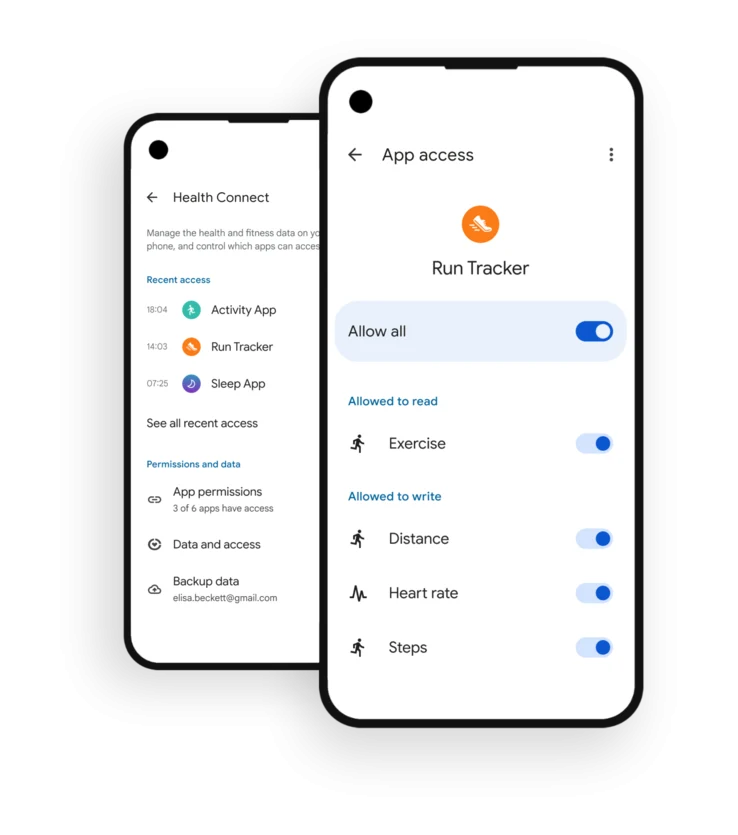

Garmin Connect will soon join Android’s Health Connect. This Google service lets users share their health information across different apps and devices, giving them a broader view of their health and potentially helping them spot longer-term trends.

Announcement at Google I/O

At the Google I/O 2025 developer conference, it was announced that Garmin will join the Health Connect ecosystem in June 2025. Garmin smartwatch users will then be able to sync their workout and health data with other apps and products in the Android ecosystem. Apps already connected to Health Connect include AllTrails, Dexcom G7, Peloton, Oura, and Withings; the complete list is available on the Google Play Store.

User Growth and New Features

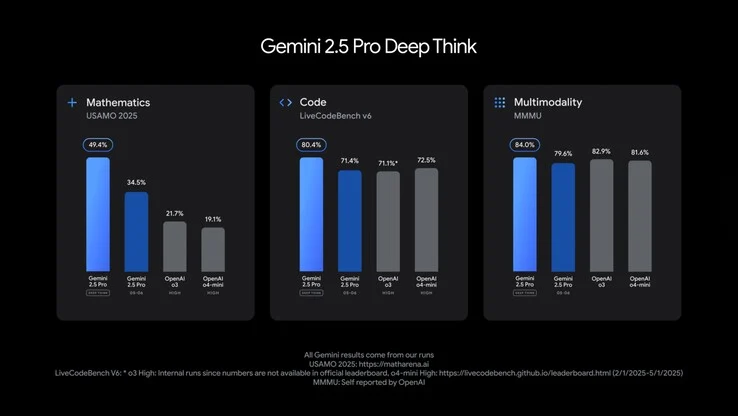

Google said Health Connect has seen a 50% increase in active users over the past six months and pointed to recent integrations with Flo, Runna, and Mi Fitness. It also outlined new Health Connect features, including Medical Records APIs that work with Fitbit’s new medical records navigator. Two new capabilities are also being introduced: Background Reads, which let apps access health data while running in the background so developers can deliver timely insights, and History Reads, which give apps access to older data so they can highlight long-term trends.

User Control Over Data Sharing

Android users will be able to control which data is shared between apps through a dashboard on their smartphones. From this dashboard, they can also delete data or prioritize some data sources over others. However, Garmin and Google have not yet announced a specific date for when the integration will launch.
