Google has opened access to its latest AI model, the experimental Gemini 2.0 Pro. The model has a two-million-token input window, the largest of any Google AI to date, which lets it handle very large text inputs and the complicated prompts built on them. Gemini 2.0 Pro can also browse the internet, run code, and generate code for applications.

Performance Compared to Other Models

Gemini 2.0 Pro outperforms Google’s previous AI models across various standardized large language model benchmarks. Nevertheless, it does not yet match humans or the top-performing AIs in every category evaluated. For instance, on the LiveBench LLM benchmark, the experimental Gemini 2.0 Pro scores only 65.13, compared with 71.57 for DeepSeek R1 and 75.88 for OpenAI’s o3-mini in high mode.

Human Evaluation and Security Measures

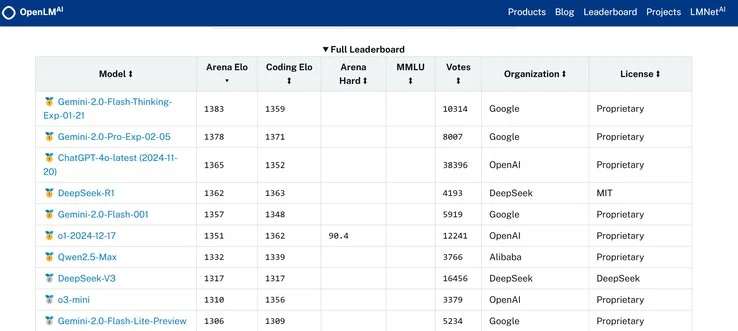

Even so, when human evaluators rate AI responses to their own prompts, Gemini 2.0 Pro ranks as one of the top two AIs in the world today, according to the OpenLM.ai Chatbot Arena Elo ranking. Hackers may find Gemini 2.0 Pro frustrating, as self-training methods were used during its development to reduce the chances of it producing unsafe responses.

Subscription and Availability

Gemini 2.0 Pro is available to all Google Gemini Advanced subscribers, who pay $19.99 per month, and to developers through Google AI Studio and Vertex AI. Anyone who wants Gemini at their fingertips can download the Gemini app on a smartphone or buy a Google Pixel 9 Pro, which comes with Gemini integrated (available for purchase on Amazon).
