Key Takeaways

1. Claude Opus 4 is Anthropic's most advanced model; early users report it can work autonomously on complex tasks for up to seven hours.

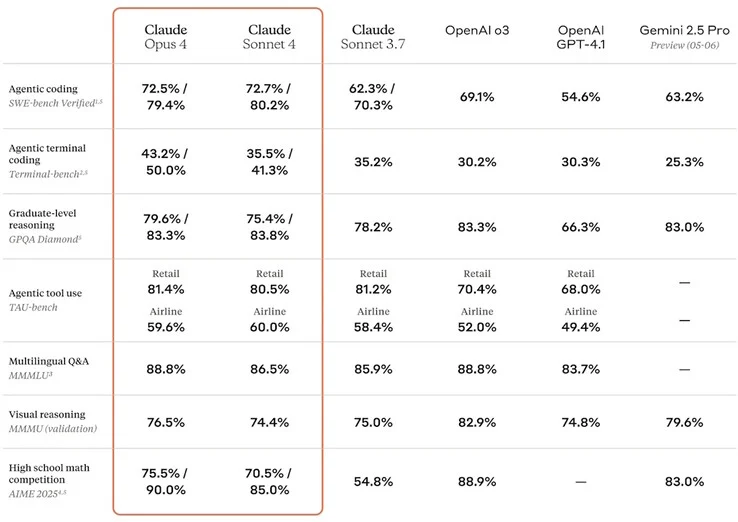

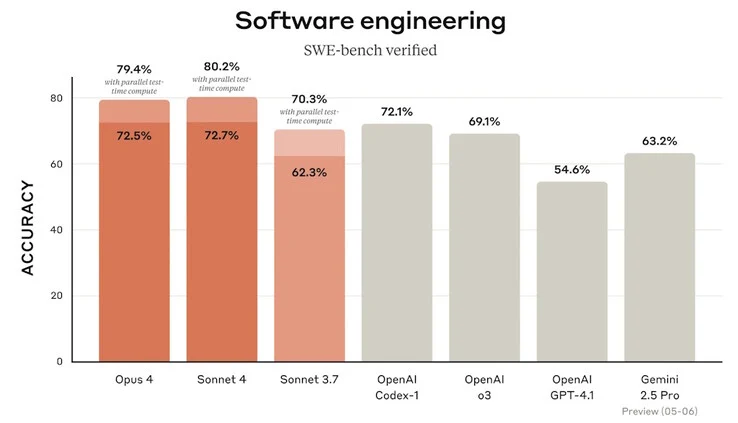

2. Both Opus 4 and Sonnet 4 deliver improved coding accuracy, helping developers build applications.

3. The models can generate Python code for data analysis and visualization, enhancing business efficiency.

4. Anthropic has deployed Claude Opus 4 under its AI Safety Level 3 (ASL-3) standards to address potential misuse risks.

5. Tools like Plaud Note and Plaud NotePin help automate transcription and summarization of meetings and classes.

Anthropic has introduced its latest AI models, Claude Opus 4 and Claude Sonnet 4, which offer improved accuracy, capabilities, and performance over their predecessors.

Opus and Sonnet Features

Opus stands out as the company’s most advanced model, designed to work on complex, long-running tasks without interruption. Early users have reported that it can carry out programming tasks autonomously for up to seven hours. The model also has improved memory, retaining information about its inputs and earlier results over the course of a task, which leads to more accurate responses. Sonnet, by contrast, is a general-purpose model that returns quick replies to standard prompts. Both models show gains in coding accuracy, helping developers build modern applications.

Data Analysis Capabilities

These models can also act as data analysts, generating Python code to analyze and visualize datasets. New API features let businesses build custom applications that integrate Claude, improving their data analysis and operational efficiency. Claude Code lets the AI work inside popular integrated development environments (IDEs) such as VS Code and JetBrains, helping programmers improve their code.
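To make the API point more concrete, here is a minimal sketch, using only the Python standard library, of how an application might ask Claude to write data-analysis code through Anthropic's Messages API. It assumes the `claude-sonnet-4-20250514` model ID and the `2023-06-01` value for the `anthropic-version` header; check both against Anthropic's current documentation. The helper names `build_analysis_request` and `send` are hypothetical, and the sketch does not use any of the newly announced API features.

```python
import json
import urllib.request

# Anthropic Messages API endpoint and version header (assumed values; verify in the docs).
API_URL = "https://api.anthropic.com/v1/messages"
API_VERSION = "2023-06-01"


def build_analysis_request(api_key: str, csv_text: str,
                           model: str = "claude-sonnet-4-20250514",
                           max_tokens: int = 1024) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) a request asking Claude
    to write Python code that analyzes and plots the given CSV data."""
    prompt = (
        "Write Python code using pandas and matplotlib that loads the CSV "
        "below, prints summary statistics, and plots each numeric column.\n\n"
        + csv_text
    )
    body = {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": API_VERSION,
            "content-type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Hypothetical helper: send the request and return the text of the
    first content block in Claude's reply (needs a valid API key)."""
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    return payload["content"][0]["text"]


if __name__ == "__main__":
    sample = "month,revenue\nJan,120\nFeb,135\nMar,150\n"
    req = build_analysis_request("YOUR_API_KEY", sample)
    print(req.get_method(), req.full_url)
    # print(send(req))  # uncomment once a real API key is supplied
```

Separating request construction from sending keeps the payload easy to inspect without a network call; in practice, Anthropic's official Python SDK wraps these same steps.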

Safety Measures Implemented

As a precaution, Anthropic has activated its AI Safety Level 3 (ASL-3) Deployment and Security Standards for Claude Opus 4. The company is still evaluating the model's potential risks, including the possibility that it could be misused to help create chemical, biological, radiological, and nuclear (CBRN) weapons.

For those who want to use Anthropic's AI in everyday tasks, tools like Plaud Note and Plaud NotePin can automatically transcribe and summarize classes and meetings. Remote workers can also chat with Claude directly by downloading the Claude app for their laptops and smartphones.
